Overview

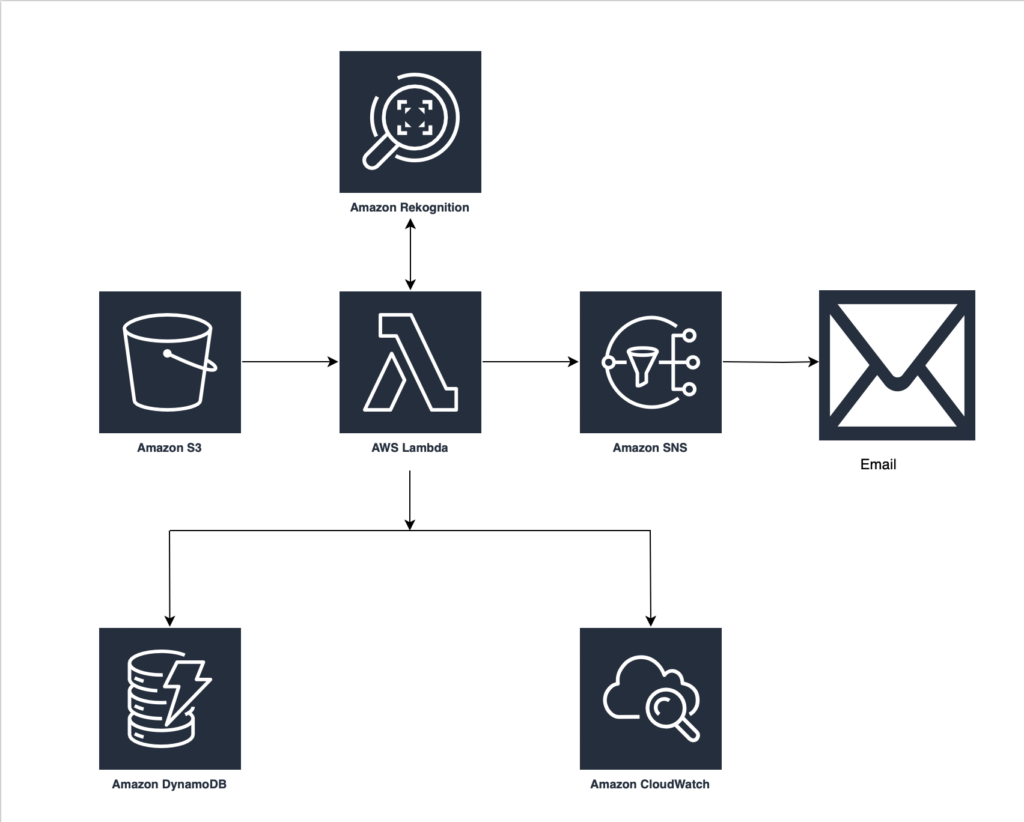

The VisionVault AI Image Analyzer is a serverless tool that automatically processes every new image and pulls out useful details the moment it’s uploaded. It taps into several AWS services to create a scalable, low-cost, event-driven setup for image analysis, structured-metadata storage, and instant alerts when certain content appears.

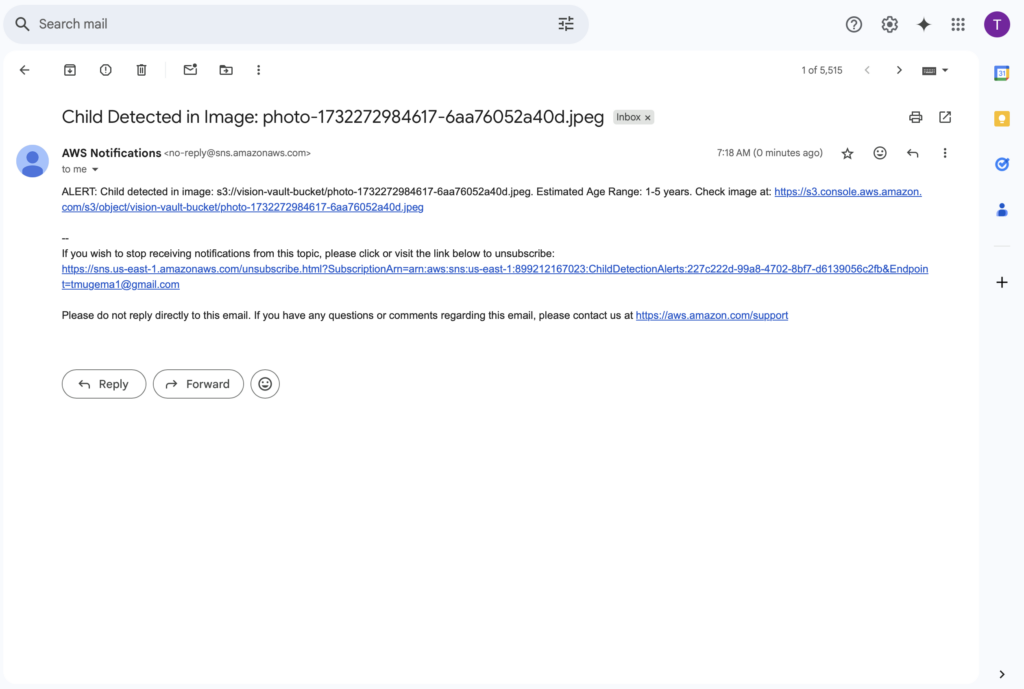

My goal was to build a pipeline that watches an S3 bucket and emails me whenever it sees a photo of a child under ten. I ended up using seven different AWS services, which gave me solid hands-on practice. Below, I’ll share how I built it, the challenges I faced, and the lessons I learned along the way.

AWS Services Used

As mentioned earlier, I used seven AWS services for this project:

- Amazon S3: S3 serves as the primary storage for raw images and as the initial trigger for processing.

- AWS Lambda: Lambda serves as the serverless compute layer. It processes S3 events, performs image analysis with Rekognition, stores the results in DynamoDB, and sends SNS alerts, so the core logic of the solution lives here. I wrote the function in Python.

- Amazon Rekognition: An AI service for analyzing images and videos (detecting labels, text, faces, and more). In this project, the Lambda function calls Rekognition's APIs to handle the image recognition tasks.

- Amazon DynamoDB: A NoSQL database for storing the structured metadata extracted from images. After Rekognition processes an image, all the extracted data is stored in a DynamoDB table.

- Amazon SNS (Simple Notification Service): Used for sending real-time email alerts when specific content is detected. In this project, it sends a notification to my email whenever an image of a child under ten is detected.

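- Amazon CloudWatch: Comprehensive monitoring and logging for all AWS services in the pipeline. I used it to view my Lambda function's logs and troubleshoot errors whenever something did not go right.

- AWS IAM (Identity and Access Management): Used to grant the Lambda function's execution role least-privilege access to S3, Rekognition, DynamoDB, and SNS.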

Design Choices: VisionVault Setup Overview

This section explains how the AWS services above power the VisionVault AI Image Analyzer and why each was chosen for this use case.

Amazon S3: Image Upload and Event Triggering

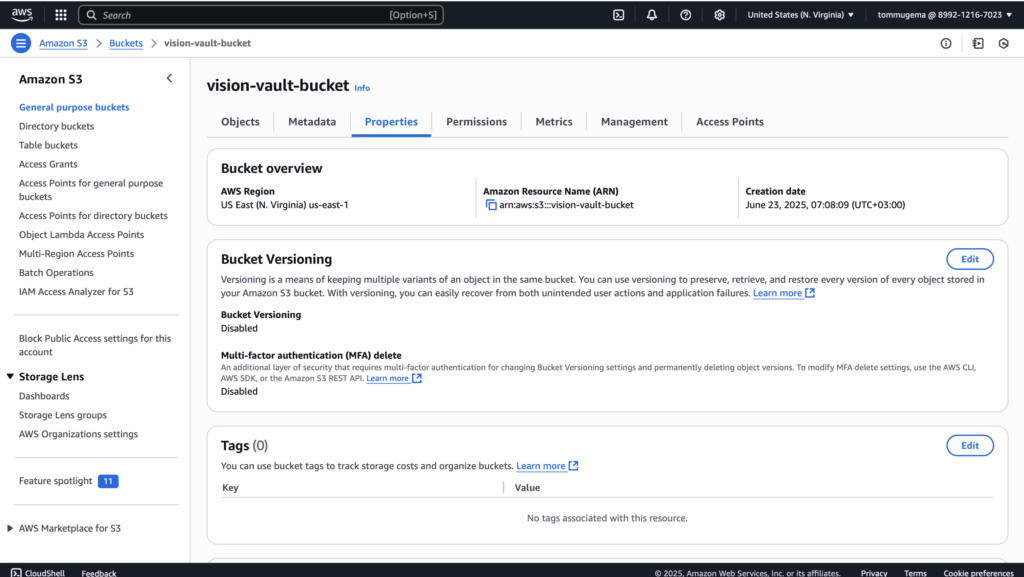

Amazon S3 is the entry point for the system and the storage layer for every image that needs to be analyzed. When a user uploads an image (in this project, that user was me), S3 emits an event notification that is configured to invoke a Lambda function, which removes the need for extra orchestration tools. To get started, I created an S3 bucket (vision-vault-bucket) to store all uploaded images.
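To make the trigger concrete, here is a minimal Python sketch, assuming the standard S3 event notification payload that S3 delivers to Lambda, of pulling the bucket name and object key out of each record. The function name `extract_uploads` is hypothetical, not code from the project.

```python
from urllib.parse import unquote_plus


def extract_uploads(event: dict) -> list:
    """Return (bucket, key) pairs for object-creation records in an S3 event."""
    uploads = []
    for record in event.get("Records", []):
        # Only handle object-creation events (e.g. "ObjectCreated:Put"); skip others.
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in the event payload (a space arrives as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return uploads
```

Because keys arrive URL-encoded, decoding them with `unquote_plus` before calling other services avoids "object not found" errors for file names containing spaces or special characters.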

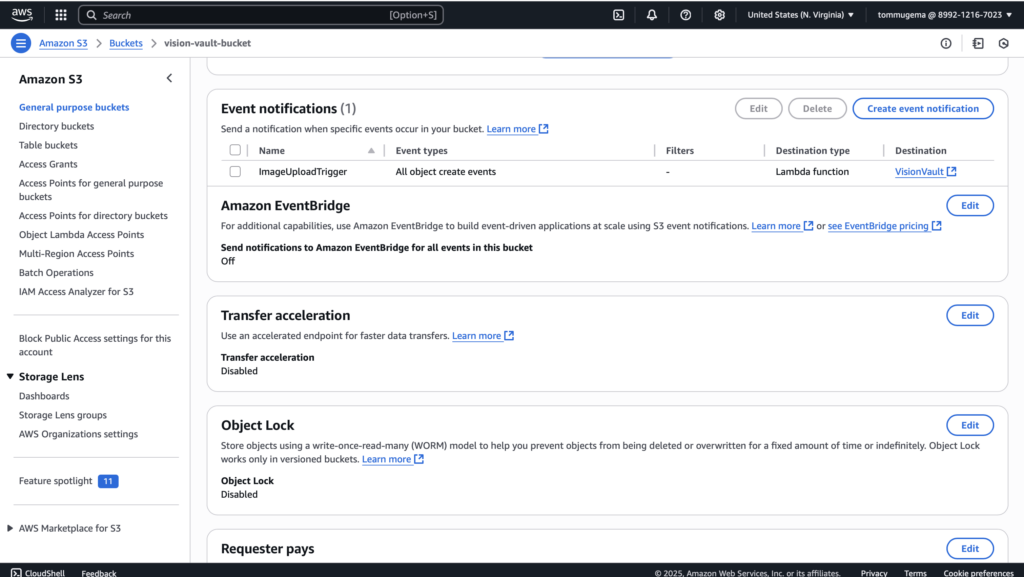

To automate the flow, I used S3 Event Notifications to trigger the Lambda function whenever a new image is uploaded. By linking “All object create events” to the Lambda function, every upload, whether a direct PUT, a copy, or a multipart upload, immediately kicks off the analysis.
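I configured the trigger in the console, but the same setting can be expressed as the `NotificationConfiguration` structure accepted by boto3's `put_bucket_notification_configuration`. The sketch below builds it; the helper name `build_notification_config` and the optional suffix filter are illustrative assumptions.

```python
from typing import Optional


def build_notification_config(function_arn: str, suffix: Optional[str] = None) -> dict:
    """Build a NotificationConfiguration routing all object-create events to Lambda."""
    lambda_config = {
        "LambdaFunctionArn": function_arn,
        # The wildcard covers Put, Post, Copy and CompleteMultipartUpload events.
        "Events": ["s3:ObjectCreated:*"],
    }
    if suffix:
        # Optional key filter (e.g. ".jpg") so only matching objects trigger the function.
        lambda_config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {"LambdaFunctionConfigurations": [lambda_config]}
```

It could be applied with `boto3.client("s3").put_bucket_notification_configuration(Bucket="vision-vault-bucket", NotificationConfiguration=build_notification_config(function_arn))`. Note that this call replaces the bucket's whole notification configuration, and when scripting it outside the console the function also needs a resource-based permission (for example via Lambda's `add_permission`) that allows `s3.amazonaws.com` to invoke it.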

AWS Lambda: Image Analysis and Orchestration

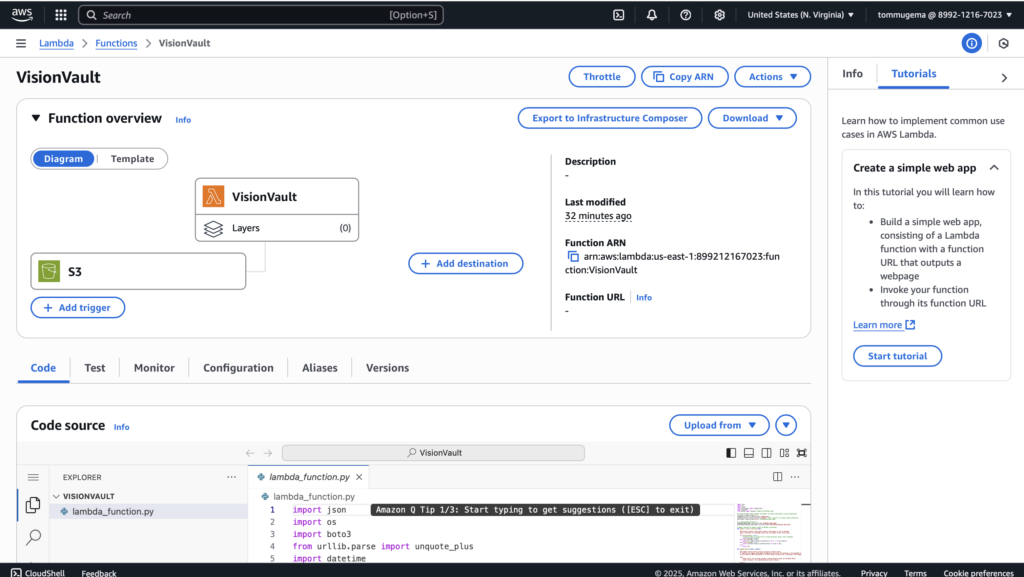

The actual image analysis and response generation happen inside a Lambda function called VisionVault. Serverless made sense for this project because the image processing task is brief and runs only when a new image is uploaded. Lambda runs compute only when needed, which helps keep costs down.

I created the VisionVault function in AWS Lambda by selecting “Author from scratch” and using the Python 3.9 runtime (as shown in the screenshot above). I kept the default x86_64 architecture, although arm64 is also available and could lower costs. For security, I set up a new execution role with basic Lambda permissions and later added the further permissions described below.

Instead of hardcoding resource names, I used environment variables in the Lambda configuration: DYNAMODB_TABLE_NAME, set to VisionVault, and CHILD_DETECTION_SNS_TOPIC_ARN, set to the ARN of the ChildDetectionAlerts topic. This approach makes the code easier to manage, update, and reuse without changing the source.
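A minimal sketch of reading those two variables at runtime. Only the variable names come from this setup; the `VisionVaultConfig` class and `load_config` helper are hypothetical.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class VisionVaultConfig:
    table_name: str
    sns_topic_arn: str


def load_config() -> VisionVaultConfig:
    """Read resource names from the Lambda environment instead of hardcoding them."""
    try:
        return VisionVaultConfig(
            table_name=os.environ["DYNAMODB_TABLE_NAME"],
            sns_topic_arn=os.environ["CHILD_DETECTION_SNS_TOPIC_ARN"],
        )
    except KeyError as missing:
        # Fail fast with a clear message if a variable was not configured.
        raise RuntimeError(f"Missing environment variable: {missing}") from missing
```

Failing at startup with a named variable is easier to diagnose in the logs than a later `None` being passed to a DynamoDB or SNS call.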

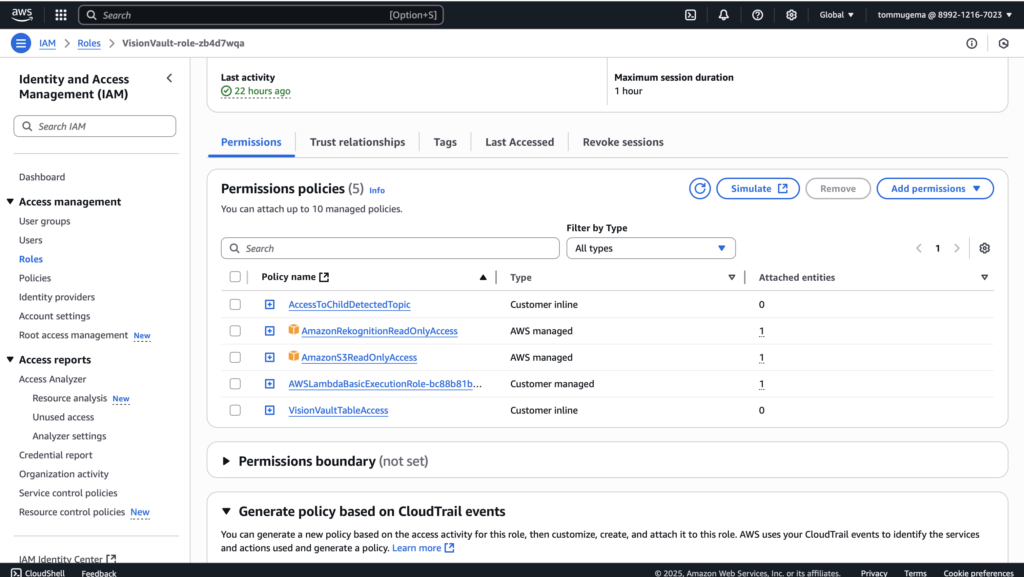

As mentioned earlier, Lambda is triggered by the S3 event and runs the Rekognition API calls. It reads the image from S3, analyzes it, and pushes the results to DynamoDB. I also added IAM permissions carefully so the function has access only to what it needs: S3 read, Rekognition read, DynamoDB write, and SNS access (as shown above). Although AWS offers full-access policies, I chose least-privilege permissions to follow security best practices.
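The flow described above can be sketched as follows. This is an illustrative outline, not the project's actual function: it assumes Rekognition reads the image directly from S3 through an `S3Object` reference (the function could instead download the bytes with `get_object`), the helper name `analyze_and_store`, the extra `Bucket` attribute, and the `MaxLabels`/`MinConfidence` values are assumptions, and the SNS alert step is left out for brevity. The clients are passed in so the logic can be exercised without AWS.

```python
import os
from decimal import Decimal
from urllib.parse import unquote_plus


def analyze_and_store(bucket, key, rekognition, table, min_confidence=80.0):
    """Label one S3 image with Rekognition and save the result to DynamoDB."""
    response = rekognition.detect_labels(
        # Rekognition fetches the object from S3 itself; the caller's role
        # still needs s3:GetObject on the bucket.
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=10,
        MinConfidence=min_confidence,
    )
    labels = [
        # Confidence is a float; boto3 needs Decimal for DynamoDB numbers.
        {"Name": lbl["Name"], "Confidence": Decimal(str(round(lbl["Confidence"], 2)))}
        for lbl in response.get("Labels", [])
    ]
    item = {"ImageID": key, "Bucket": bucket, "Labels": labels}
    table.put_item(Item=item)
    return item


def lambda_handler(event, context):
    import boto3  # bundled with the Lambda Python runtime

    rekognition = boto3.client("rekognition")
    table = boto3.resource("dynamodb").Table(os.environ["DYNAMODB_TABLE_NAME"])
    records = event.get("Records", [])
    for record in records:
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        analyze_and_store(bucket, key, rekognition, table)
    return {"processed": len(records)}
```

In a real deployment the clients would usually be created once at module level so warm invocations reuse them; they are built inside the handler here only so the sketch can be imported without `boto3` installed.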

Amazon DynamoDB Table Setup

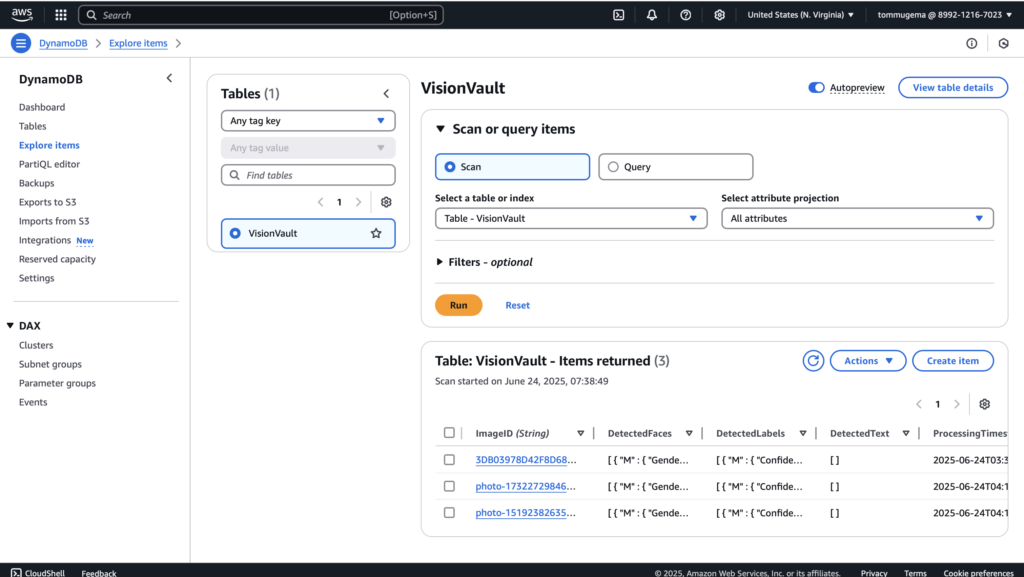

The VisionVault DynamoDB table stores the results of image analysis. I created the table using the AWS DynamoDB console, naming it “VisionVault” with a partition key called ImageID (string type). For this project, I kept other settings at their default values, including on-demand capacity mode, to simplify the initial setup. This table serves as the main database to hold and quickly retrieve analyzed data from images processed by the Lambda function.
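For reference, the console settings above correspond roughly to the following `create_table` arguments in boto3. This is a sketch; `table_definition` is a hypothetical helper, and `PAY_PER_REQUEST` is the API's name for on-demand capacity.

```python
def table_definition(table_name: str = "VisionVault") -> dict:
    """Keyword arguments for a DynamoDB create_table call matching the console setup."""
    return {
        "TableName": table_name,
        # Partition (HASH) key only; the table has no sort key.
        "KeySchema": [{"AttributeName": "ImageID", "KeyType": "HASH"}],
        # Only key attributes are declared; all other attributes are schemaless.
        "AttributeDefinitions": [{"AttributeName": "ImageID", "AttributeType": "S"}],
        # On-demand capacity: billed per request, no throughput to provision.
        "BillingMode": "PAY_PER_REQUEST",
    }
```

`boto3.client("dynamodb").create_table(**table_definition())` would create the table. Because only the key is declared, the attributes the Lambda function writes alongside ImageID can vary from item to item.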

Amazon SNS Topic Setup

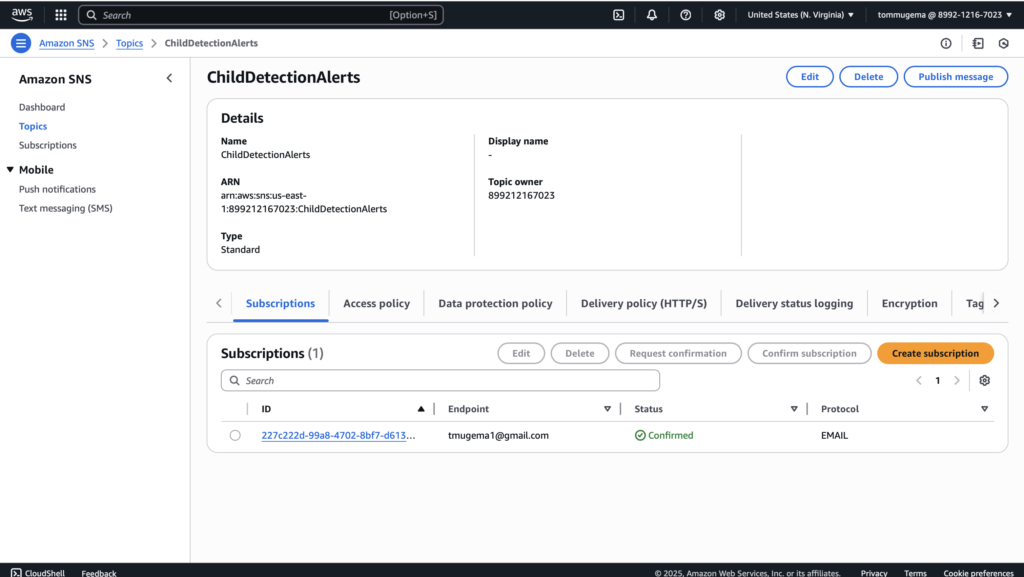

To get real-time alerts when child images are detected, I created an Amazon SNS topic called ChildDetectionAlerts in the SNS console, choosing the Standard type (not FIFO) because email subscriptions require standard topics. After creating the topic, I added an email subscription with my own email address and confirmed it by clicking the confirmation link sent by AWS.

This SNS topic lets the Lambda function send immediate email notifications, keeping me informed of important detections. If an uploaded image shows something other than a child, no notification is sent to my email (as shown in the screenshot above).
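One way to implement the child check, sketched under assumptions: it uses Rekognition's `DetectFaces` age estimate (`AgeRange`) and treats a face as a child under ten when the upper bound of the estimated range is below 10. The post does not say which rule VisionVault uses, so the threshold logic and the helper names `find_young_faces` and `alert_if_child` are hypothetical.

```python
def find_young_faces(face_details, max_age=10):
    """Return faces whose estimated age range lies entirely below max_age."""
    return [
        face
        for face in face_details
        # A face without AgeRange defaults to max_age and is therefore excluded.
        if face.get("AgeRange", {}).get("High", max_age) < max_age
    ]


def alert_if_child(bucket, key, rekognition, sns, topic_arn, max_age=10):
    """Publish an SNS alert if any detected face is estimated to be under max_age."""
    response = rekognition.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        # The DEFAULT attribute set omits AgeRange, so request all attributes.
        Attributes=["ALL"],
    )
    young = find_young_faces(response.get("FaceDetails", []), max_age)
    if not young:
        return False  # no child detected: no email is sent
    sns.publish(
        TopicArn=topic_arn,
        Subject="VisionVault: child detected",
        Message=f"{len(young)} face(s) estimated under {max_age} in s3://{bucket}/{key}",
    )
    return True
```

Requiring the whole estimated range to sit below ten keeps false alarms down at the cost of missing borderline faces; testing the lower bound instead would flip that trade-off.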

Monitoring Lambda Function with Amazon CloudWatch

To track the performance and health of the VisionVault Lambda function, I used Amazon CloudWatch. CloudWatch automatically collects logs and metrics from the Lambda executions, allowing me to monitor its behavior and quickly identify any errors or issues. In fact, I used it to troubleshoot a permissions error that was preventing the Lambda from sending data to our DynamoDB table.
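In the Lambda Python runtime, output from the standard `logging` module (and `print`) ends up in the function's CloudWatch log group. Here is a small sketch of logging around the DynamoDB write so that a failure like the one above leaves a traceback in CloudWatch; `store_with_logging` is a hypothetical helper.

```python
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)  # in Lambda, these records are sent to CloudWatch Logs


def store_with_logging(table, item):
    """Write an item and log enough context to diagnose failures in CloudWatch."""
    logger.info("Writing ImageID=%s", item.get("ImageID"))
    try:
        table.put_item(Item=item)
    except Exception:
        # logger.exception logs at ERROR level with the full traceback, e.g. an
        # AccessDeniedException when the role lacks dynamodb:PutItem.
        logger.exception("put_item failed for ImageID=%s", item.get("ImageID"))
        raise
```

Re-raising after logging keeps the invocation marked as failed, so the error also shows up in the function's error metrics rather than being silently swallowed.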

Challenges and Lessons Learned

Developing this serverless image analysis pipeline highlighted several common challenges in AWS and provided valuable lessons. Let me share a few of these.

DynamoDB’s Numeric Type Requirement (Floats vs. Decimals)

Attempting to store Rekognition’s confidence scores (which are Python floats) directly in DynamoDB led to a TypeError, because boto3’s DynamoDB serializer does not accept float values.

Lesson Learned: boto3 requires Python’s Decimal type for DynamoDB numbers, which preserves exact precision and avoids floating-point inaccuracies. This is a critical detail for applications dealing with financial data, measurements, or any numeric values where exactness is important.
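A common fix is to convert floats before writing. The recursive helper below is a sketch (the name `floats_to_decimals` is hypothetical) that walks nested dicts and lists, such as a Rekognition response, and replaces every float with a `Decimal`.

```python
from decimal import Decimal


def floats_to_decimals(value):
    """Recursively convert floats (e.g. Rekognition confidence scores) to Decimal."""
    if isinstance(value, float):
        # Going through str() keeps the printed value (99.12 -> Decimal("99.12"))
        # instead of the float's exact binary expansion.
        return Decimal(str(value))
    if isinstance(value, dict):
        return {k: floats_to_decimals(v) for k, v in value.items()}
    if isinstance(value, list):
        return [floats_to_decimals(v) for v in value]
    return value
```

An alternative one-liner is `json.loads(json.dumps(data), parse_float=Decimal)`, which round-trips the structure through JSON and parses every float as a `Decimal`.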

AWS IAM Permissions

The Lambda function initially encountered an AccessDeniedException when attempting to perform dynamodb:PutItem. I was able to identify this error by reviewing the logs in Amazon CloudWatch.

Lesson Learned: Always review and configure permissions for all the services a Lambda function (or any other AWS service) needs to access.
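As a sketch of what least privilege can look like for this function, the helper below builds an identity policy covering only the calls the pipeline makes. The action list is an assumption based on the services described above (`DetectLabels` and `DetectFaces` for Rekognition), `least_privilege_policy` is a hypothetical name, and the ARNs are placeholders you would supply.

```python
def least_privilege_policy(bucket: str, table_arn: str, topic_arn: str) -> dict:
    """Build an IAM identity policy granting only the calls VisionVault makes."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:GetObject",
             "Resource": f"arn:aws:s3:::{bucket}/*"},
            # DetectLabels and DetectFaces do not support resource-level permissions.
            {"Effect": "Allow",
             "Action": ["rekognition:DetectLabels", "rekognition:DetectFaces"],
             "Resource": "*"},
            # The exact permission that was missing in the error above.
            {"Effect": "Allow", "Action": "dynamodb:PutItem", "Resource": table_arn},
            {"Effect": "Allow", "Action": "sns:Publish", "Resource": topic_arn},
        ],
    }
```

Serialized with `json.dumps`, the result can be attached to the execution role as an inline policy. The basic execution role still supplies the CloudWatch Logs permissions the function needs to write its logs.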

SNS Topic Types (Standard vs. FIFO) and Protocol Compatibility

When attempting to subscribe an email to an SNS topic for child detection alerts, the “Email” protocol option was missing. This was traced back to the topic being a FIFO type.

Lesson Learned: SNS topics have distinct types (Standard vs. FIFO) with different guarantees and, consequently, different feature sets and compatibilities. FIFO topics provide strict ordering and deduplication but limit supported subscription protocols (e.g., no direct email).
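The difference shows up directly in how a topic is created. In this sketch (helper names hypothetical), a FIFO topic needs a name ending in `.fifo` plus the `FifoTopic` attribute, while a standard topic needs neither; the `.fifo` suffix also makes it easy to spot a FIFO topic from its ARN.

```python
def create_topic_kwargs(name: str, fifo: bool = False) -> dict:
    """Keyword arguments for an SNS create_topic call."""
    if fifo:
        # FIFO topic names must end in ".fifo" and set the FifoTopic attribute.
        topic_name = name if name.endswith(".fifo") else f"{name}.fifo"
        return {"Name": topic_name, "Attributes": {"FifoTopic": "true"}}
    # Standard topic: the type that accepts email subscriptions.
    return {"Name": name}


def supports_email(topic_arn: str) -> bool:
    """Email subscriptions need a standard topic; FIFO topic ARNs end in '.fifo'."""
    return not topic_arn.endswith(".fifo")
```

A standard topic created with `boto3.client("sns").create_topic(**create_topic_kwargs("ChildDetectionAlerts"))` can then take an email subscription through `subscribe(TopicArn=..., Protocol="email", Endpoint=...)`.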


Conclusion

Working on the VisionVault AI Image Analyzer project gave me hands-on experience building a serverless image processing pipeline using multiple AWS services. I used S3 to trigger the Lambda function on image upload, Lambda to run the core logic, Rekognition for analyzing images, DynamoDB to store results, and SNS to send real-time alerts.

I faced and resolved challenges like IAM permission errors and data handling issues, which helped me better understand how to properly configure roles and use CloudWatch for debugging.

While the system works well as designed, one possible improvement would be to add API Gateway to securely expose an upload endpoint. This would be especially important if the solution is used outside of AWS or integrated with web or mobile apps.

Overall, this project gave me practical insight into building and managing event-driven applications on AWS.